Can we trust AI 100%?

Even if you haven’t realized it, AI has changed every aspect of our lives. Your maps app predicts the traffic and offers you the fastest route when you’re trying to get to a meeting on time. The dress you want to buy appears in an ad on a random website. Netflix recommends a show from your favorite genre. These are just a few of the endless examples of how AI is making our daily lives much easier. But the fact that AI-driven applications are already used in numerous areas doesn’t mean that they always work perfectly.

Virtual assistants in banking apps that don’t understand you, racially biased candidate screening by AI-powered recruitment systems, and facial recognition tools that can’t recognize you when you wear a mask are some of the problems we encounter when AI-driven applications are not developed properly. One way to avoid such problems is to have the data set used to train the application’s AI model annotated by a skilled and experienced team. Data annotation categorizes (labels) the data set used to develop an AI model, and if it is not done properly, the model won’t work as expected. In addition, rare “edge cases” that the AI has not encountered before can cause it to make mistakes. Let’s examine some of the problems that may arise when an AI model is not trained with a good enough data set or encounters a new scenario.

Tesla Autopilot Confuses Irrelevant Objects With Traffic Signs

Tesla’s Autopilot, which is already available to users, is an exciting system that promises to save us from the stress of driving in crowded cities or on long journeys. It may seem incredible to be this close to a future where your car takes you wherever you want fully autonomously, but there are many unusual edge cases that Tesla’s AI-driven Autopilot software has yet to face.

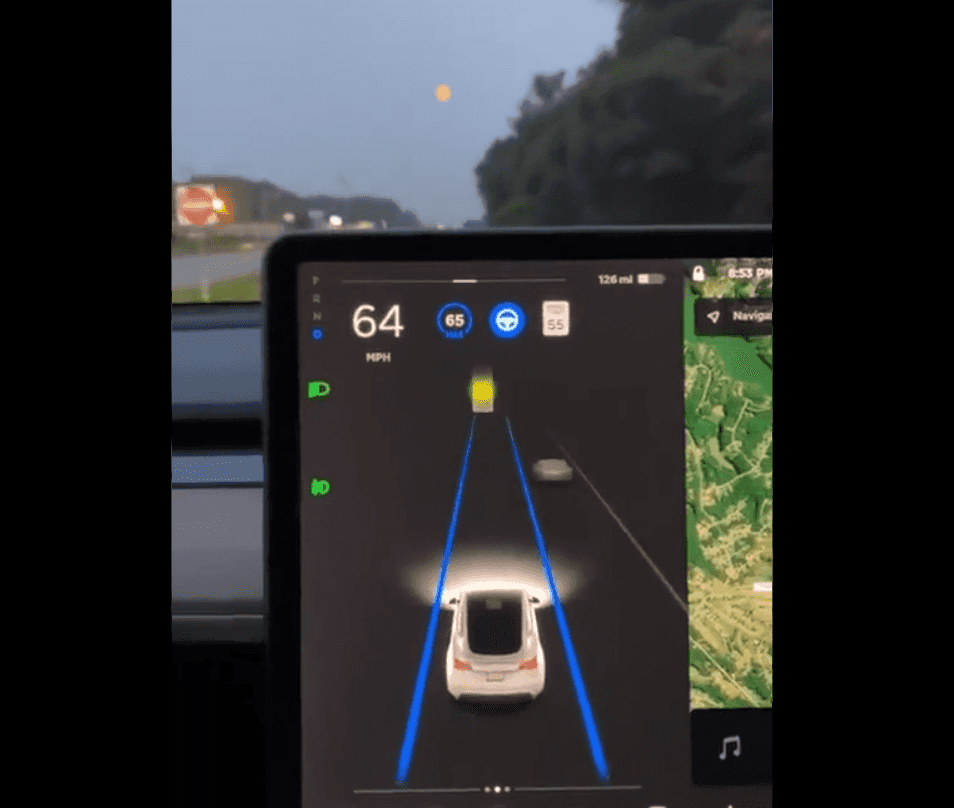

A few months ago, a Tesla driver noticed something odd while his car was cruising in Autopilot mode: the car was confusing the full moon with a never-ending yellow traffic light. Because the moon was yellow and fairly low in the sky, Tesla’s autonomous driving software perceived it as a yellow traffic light. Fortunately, the only downside of this odd incident was that the car kept its speed low, as if the light were about to turn red.

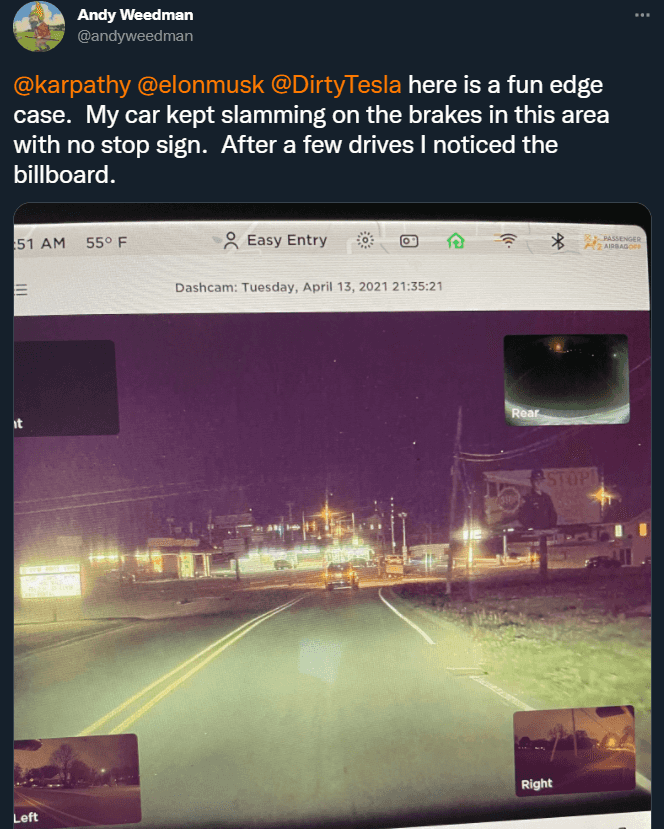

Twitter user Andy Weedman faced another problem while driving his Tesla: the car kept slamming on the brakes at the same point on the highway. He eventually discovered that the cause was a billboard at the spot where Autopilot suddenly braked. The billboard ad showed a giant STOP sign, and Tesla’s Autopilot software mistook it for a real traffic sign.

The Biased Algorithm of Google

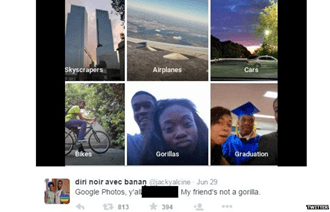

Google Photos, Google’s image storage and sharing application, uses automatic image tagging to group images according to their common visual characteristics. Although this feature mostly works well, in 2015 Twitter user @jackyalcine shared a deeply offensive error: the Photos app had tagged photos of his African-American friends as “Gorillas”.

Google apologized, said it was “appalled” and promised to fix the problem. In 2018, however, Wired tested the Photos image tagging algorithm by uploading thousands of images to the application and then shared the results. According to Wired’s article, Google’s solution was not to make the app tag images correctly but to remove the tags “gorilla”, “chimp” and “monkey” from its image recognition algorithm entirely.

AI-Powered Camera Mistakes a Bald Referee’s Head for the Ball

In 2020, Scottish football team Inverness Caledonian Thistle FC began livestreaming its matches with an AI-powered camera system so that fans could watch them during the pandemic. The camera, developed by Pixellot, used image recognition to follow the ball continuously.

According to Pixellot, the camera performed quite well in test matches, but a problem showed up in the match against Ayr United: the camera kept confusing the ball with a linesman’s bald head. It was so frustrating that one fan tweeted that he had missed the team’s goal because the camera was focused on the linesman’s head. Speaking to The Verge, Pixellot explained that the problem occurred because the linesman’s head was inside the field area in the video recordings and the ball was yellow, which made it look similar to the linesman’s head.

Data annotation plays a critical role in developing the AI models that enable Tesla’s autonomous driving software to detect and identify objects on the road, Google Photos to group and tag different images, and an AI-powered camera system to recognize the soccer ball and follow it. For example, annotating a dataset of traffic light photos with each light’s color and using it to train the AI model enables the model to distinguish traffic lights by color. In addition, for the model to recognize traffic lights of different shapes and in different environments, it needs a comprehensive data set that has been labeled appropriately. If the dataset used to develop the AI model is not carefully annotated by an experienced team, the model may make mistakes in detecting and classifying objects, leading to problems like those in the incidents we have examined. Moreover, data labeling should be an ongoing process: the AI model needs to be retrained with new data so that it is prepared for the edge cases it may encounter.
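To make the point about comprehensive labeling more concrete, here is a minimal Python sketch. It assumes a hypothetical annotation format in which each labeled traffic light records its color and the environment it was photographed in; the `Annotation` class and `coverage_gaps` function are illustrative names, not part of any real labeling tool. The sketch only checks which color and environment combinations lack enough labeled examples.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical annotation record: one labeled traffic light in one image.
@dataclass(frozen=True)
class Annotation:
    image_id: str
    color: str        # e.g. "red", "yellow", "green"
    environment: str  # e.g. "day", "night", "rain"

# Assumed set of traffic light classes for this illustration.
COLORS = ("red", "yellow", "green")

def coverage_gaps(annotations, environments, min_count=1):
    """Return sorted (color, environment) pairs with fewer than min_count labeled examples."""
    counts = Counter((a.color, a.environment) for a in annotations)
    return sorted(
        (color, env)
        for color in COLORS
        for env in environments
        if counts[(color, env)] < min_count
    )

if __name__ == "__main__":
    # Made-up sample: only one night-time photo (a red light) has been labeled.
    data = [
        Annotation("img_001", "red", "day"),
        Annotation("img_002", "yellow", "day"),
        Annotation("img_003", "green", "day"),
        Annotation("img_004", "red", "night"),
    ]
    print(coverage_gaps(data, environments=("day", "night")))
```

Running it prints `[('green', 'night'), ('yellow', 'night')]`: the sample has no labeled night-time photos of yellow or green lights, which is the kind of gap that can leave a model unprepared for conditions it rarely sees during training.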

At Co-one, our working model is built on meticulous data labeling, cross-validation and agile tracking, which lets us deliver the labeled data set your AI model needs as quickly as possible. With a data set correctly labeled by an experienced team, the AI model can identify the inputs it encounters as intended, reach the appropriate result and work reliably. At Co-one, we know how important high-quality data is for companies developing AI-powered products, so we’re constantly working to provide the fast and seamless data annotation service you need.

If you want to use Co-one’s data labeling service to develop an artificial intelligence product that works as intended, contact us!